The Shifting Bottleneck Conundrum: How AI Is Reshaping the Software Development Lifecycle

The impact of AI: Real gains or shifting bottlenecks?

Are you planning to move to data-driven software management to stay on top of your quality assurance status, your security findings and your development velocity? Thinking about building it yourself with Elasticsearch, Logstash and Kibana, or maybe just using your existing Splunk instance? Read on to find out why this may or may not be a good idea.

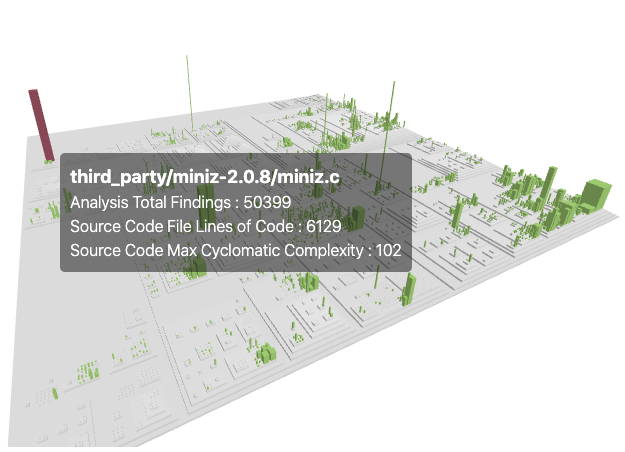

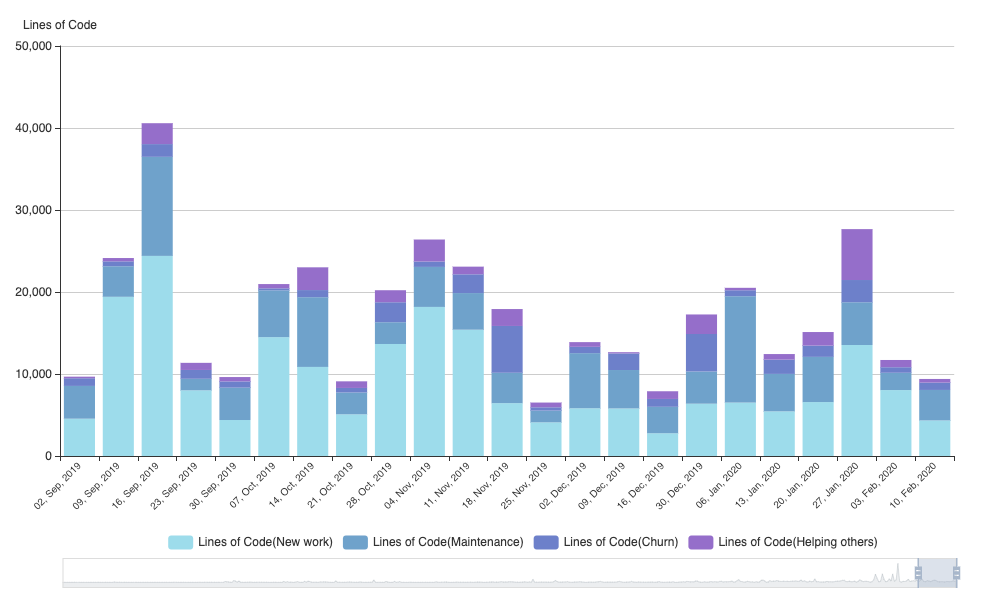

Again and again we see software development organisations struggle to keep track of the different tools and processes within the software lifecycle. Managing many engineering teams that use a range of DevOps tools is not easy. Most managers and team leaders do not have time to log into each individual tool such as Jira, Jenkins, GitHub and their test and security tools just to find out the overall state of their projects. At the same time, these tools hold all the information needed to meaningfully judge release and quality criteria, security risks and team productivity goals.

Each organisation has its own approach to addressing these challenges. One common approach is to delegate the problem to a manual reporting structure: hire a project manager who gathers data from each tool and potentially from each team member, collates that data into Excel sheets and sends you regular updates.

The downsides are plentiful: Additional reporting and management overhead can be immense, engineers get disrupted and burdened with activities that reduce their productivity, and more often than not the collected data is already out of date by the time it has been aggregated and presented back to management.

Which brings us to the second approach: building a software analytics and management instance yourself. This is often driven by an engineering or IT team that believes in data-driven decision making and wants to demonstrate its value to management without spending on new tools. Often this starts as a proof of concept using the ELK stack or an existing Splunk instance.

ELK stands for Elasticsearch, Logstash and Kibana, which together with a few companion tools form an open source stack for data storage, manipulation and visualisation. The ELK stack is one of the most popular solutions for collecting streaming data from various sources and storing it in a distributed, scalable NoSQL database. It also provides full-text indexing and near-real-time querying, which makes it well suited to ingesting large volumes of streaming data, analysing that data and visualising the data streams and query results through Kibana dashboards.
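To make the kind of question ELK answers well more concrete, here is a minimal sketch that builds an Elasticsearch Query DSL request body in Python. The field names `status` and `@timestamp` and the helper `failed_builds_per_day` are assumptions made up for illustration, and nothing is sent to a cluster; the sketch only shows the shape of a filter-plus-aggregation request.

```python
import json


def failed_builds_per_day(days: int = 7) -> dict:
    """Build a Query DSL body that counts failed builds per day.

    Assumed (hypothetical) index layout: a keyword field `status`
    and a date field `@timestamp`.
    """
    return {
        "size": 0,  # only the aggregation buckets are wanted, not the raw hits
        "query": {
            "bool": {
                "filter": [
                    {"term": {"status": "failed"}},
                    {"range": {"@timestamp": {"gte": f"now-{days}d/d"}}},
                ]
            }
        },
        "aggs": {
            "per_day": {
                "date_histogram": {
                    "field": "@timestamp",
                    "calendar_interval": "day",
                }
            }
        },
    }


if __name__ == "__main__":
    # Print the body so it can be inspected or pasted into a REST client.
    print(json.dumps(failed_builds_per_day(), indent=2))
```

A body like this is read-only by nature: it filters and buckets events that have already been ingested, which is exactly the workload the stack was designed for.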

ELK has become very popular with IT and network departments for log and anomaly investigations and for whipping up quick visualisations of IT infrastructure state. Other common tools we see that fit the same bill are Grafana and Splunk.

However, the systems above are not intended to be primary data stores, nor to have relational database operations built on top of them. They do not offer the ACID properties of relational databases and are much better suited to read-mostly analytics workloads. And this is where a lot of trouble begins.
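As a rough illustration of what the ACID guarantees buy you, the sketch below uses Python's built-in `sqlite3` module to change the status of a security finding and record an audit entry as one atomic unit. The tables `findings` and `audit` and the function `retriage` are hypothetical names chosen for this example, not part of any particular product.

```python
import sqlite3


def retriage(conn, finding_id, new_status, user):
    """Change a finding's status and log the change as one atomic unit."""
    # `with conn` commits on success and rolls back if an exception escapes.
    with conn:
        cur = conn.execute(
            "UPDATE findings SET status = ? WHERE id = ?",
            (new_status, finding_id),
        )
        if cur.rowcount != 1:
            raise LookupError(f"no finding with id {finding_id}")
        conn.execute(
            "INSERT INTO audit (finding_id, status, changed_by) VALUES (?, ?, ?)",
            (finding_id, new_status, user),
        )


def demo():
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE findings (id INTEGER PRIMARY KEY, status TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE audit (finding_id INTEGER NOT NULL, "
        "status TEXT NOT NULL, changed_by TEXT NOT NULL)"
    )
    conn.execute("INSERT INTO findings (id, status) VALUES (1, 'open')")
    conn.commit()

    retriage(conn, 1, "false_positive", "alice")  # both writes succeed
    try:
        # The audit insert fails (changed_by is NULL), so the status
        # update made just before it is rolled back as well.
        retriage(conn, 1, "fixed", None)
    except sqlite3.IntegrityError:
        pass

    status = conn.execute("SELECT status FROM findings WHERE id = 1").fetchone()[0]
    audit_rows = conn.execute("SELECT COUNT(*) FROM audit").fetchone()[0]
    return status, audit_rows


if __name__ == "__main__":
    print(demo())
```

After the failed second call the finding still has the status set by the first call and the audit table still holds a single row. Getting the same all-or-nothing behaviour across several documents in a search-oriented store typically has to be engineered by hand.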

The Elk Test, sometimes called the Moose Test, is an evasive driving manoeuvre used to test the stability of cars. It gained some notoriety through quite spectacular pictures of soon-to-be-released vehicles toppling over.

Software management has its own ELK challenge. As mentioned, the ELK stack is good for analytics and streaming data, but is this really what a software management team needs?

Some key requirements are:

As you can see, this requires a combination of traditional database activities with analytics and visualisations as you know them from ELK stack tools. Moreover, you want the ability not only to look at the results, but also to understand their causes by deep-diving into the original full data sets, such as the source code or commits.
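One way to picture this combination is a dashboard-style aggregate that can be traced back to the individual commits behind it. The sketch below uses Python's built-in `sqlite3` module with a tiny, invented data set; the schema, the sample rows and the function names are assumptions for illustration only.

```python
import sqlite3

SCHEMA = """
CREATE TABLE commits (
    sha TEXT PRIMARY KEY,
    repo TEXT NOT NULL,
    author TEXT NOT NULL
);
CREATE TABLE findings (
    id INTEGER PRIMARY KEY,
    sha TEXT NOT NULL REFERENCES commits(sha),
    severity TEXT NOT NULL
);
"""


def load_sample(conn):
    """Create the hypothetical schema and insert a few made-up rows."""
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO commits VALUES (?, ?, ?)",
        [("a1", "api", "alice"), ("b2", "api", "bob"), ("c3", "web", "carol")],
    )
    conn.executemany(
        "INSERT INTO findings (sha, severity) VALUES (?, ?)",
        [("a1", "high"), ("a1", "low"), ("b2", "high"), ("c3", "low")],
    )
    conn.commit()


def high_severity_by_repo(conn):
    """Dashboard-style view: number of high-severity findings per repository."""
    return conn.execute(
        "SELECT c.repo, COUNT(*) FROM findings f "
        "JOIN commits c ON c.sha = f.sha "
        "WHERE f.severity = 'high' GROUP BY c.repo ORDER BY c.repo"
    ).fetchall()


def drill_down(conn, repo):
    """Cause-level view: the commits and authors linked to those findings."""
    return conn.execute(
        "SELECT c.sha, c.author FROM findings f "
        "JOIN commits c ON c.sha = f.sha "
        "WHERE f.severity = 'high' AND c.repo = ? ORDER BY c.sha",
        (repo,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    load_sample(conn)
    print(high_severity_by_repo(conn))
    print(drill_down(conn, "api"))
```

The aggregate answers "how are we doing?", while the join back to `commits` answers "why?". It is this second step that tends to be awkward in a store built around denormalised event documents.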

So where to go from here?

A journey to data-driven software management built on in-house, homegrown solutions starts out quickly and cheaply, and more often than not ends in an unmaintainable, expensive obstacle.

If you are Google, Facebook, AWS or similar, you probably have the capabilities and resources to roll your own solutions. If you are not part of that elite club, the motivation for developing an in-house solution is usually quite different: perceived cost effectiveness and control. This, however, often does not go as planned, and common failure modes we observe are:

In our experience, (mis)using existing stacks can work well for a proof of concept to show to stakeholders, but it rarely scales into production. Moreover, it will consume significant resources while you are essentially building a product for a market of one.

Having said that, it is not all futile. If you are mostly interested in visualising logging data and streaming events, without on-demand manipulation of past data, drill-downs into source code or detailed results management, then using a system originally intended for a different purpose might still work. This is especially true if you plan it for one defined purpose and one team, with no intention of rolling it out across teams and silos.

There are a few conclusions here. Firstly, be sure to understand what you intend to build and what the real needs are. In other words, don’t get an Ikea flatpack when you essentially want a car. No matter how you assemble it, it might not meet your intended use case … other than as the initial mockup of a car.

Secondly, commercial software intelligence solutions are designed with scalability, flexibility and extensions in mind. They can grow with your needs and tool portfolios.

Thirdly, your in-house resources are not free (at least one wouldn’t assume so) and are probably better used for more productive activities.

If you would like to learn more about the ROI of software intelligence and about buying versus building your own solution, reach out to our friendly team at Logilica and let us assist you in planning your digital transformation journey. An ELK might well be what you need, as long as it passes the test.
