The Shifting Bottleneck Conundrum: How AI Is Reshaping the Software Development Lifecycle

The impact of AI: Real gains or shifting bottlenecks?

In today’s data-driven environment, there is no shortage of information available to both contributors and users. People often spend hours combing through logs, trying to piece that information together into something usable. This turns what could be swift, data-driven decisions into time-consuming challenges that slow down innovation.

In modern software engineering, artificial intelligence (AI) has become a necessity. Integrating AI into your products addresses several user needs: convenience through constant availability, significant time savings, and access to complex insights. With large language models (LLMs), AI-powered assistants can sift through vast amounts of data to find the details you’re looking for. They surface patterns, offer recommendations, and anticipate your needs, helping to ensure that valuable information isn’t missed or overlooked.

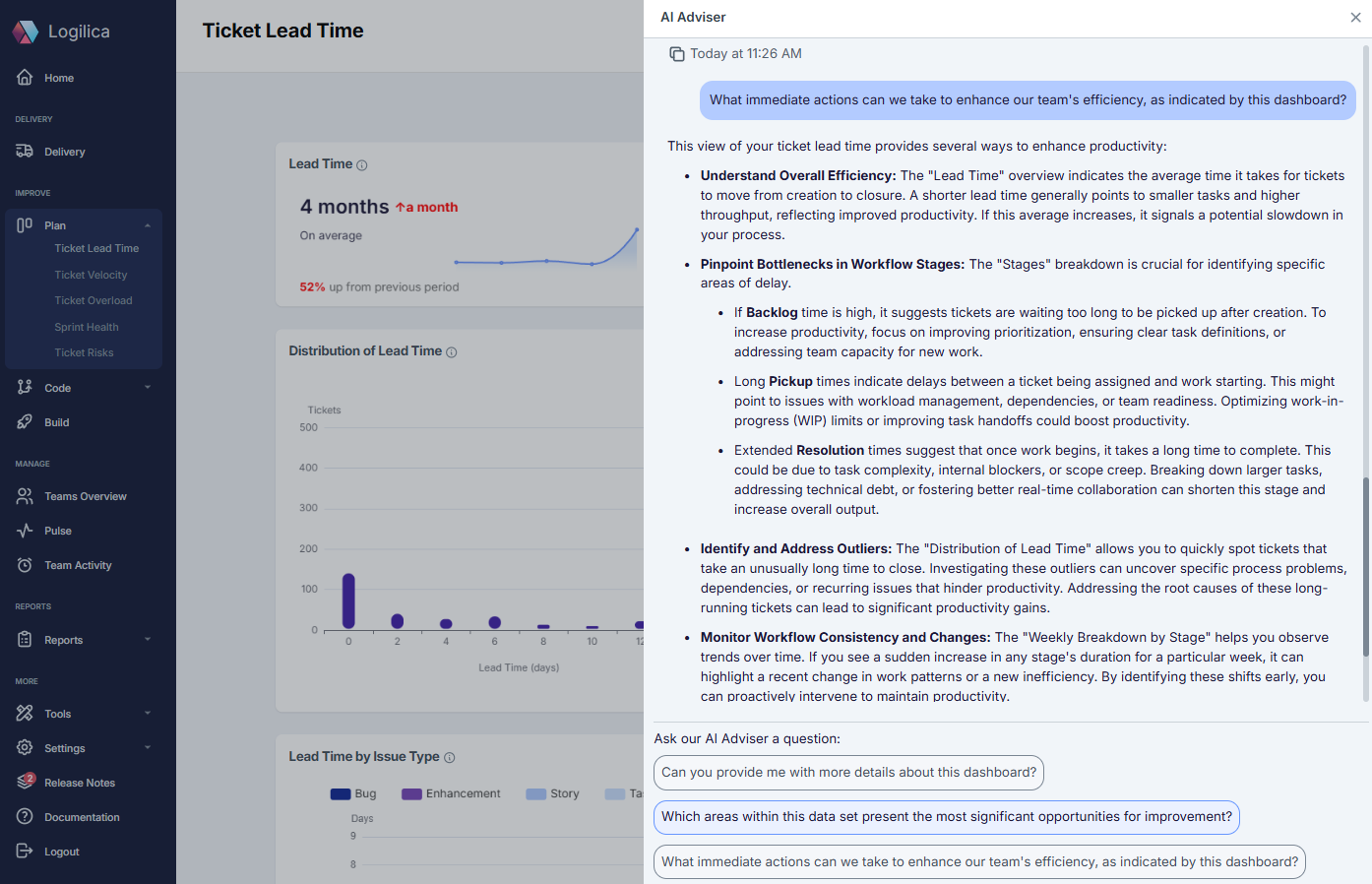

Inspired by these possibilities, the Logilica Labs team has developed an intuitive, user-friendly AI assistant designed specifically for Logilica’s data model of the Software Development Life Cycle (SDLC) pipeline. It answers users’ questions about the performance metrics of their projects. This article walks through the details and findings from developing our initial AI assistant. Although the assistant has matured considerably since then, we believe the article still offers valuable insights.

Our AI assistant responds to questions about the SDLC pipeline data available in Logilica’s data model. Answering a question involves extracting keywords from the prompt, generating a data query, retrieving the data, and summarising the insights relevant to the user’s needs. The following stages outline how our AI Advisor goes through this process.
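The four stages described above can be illustrated with a minimal Python sketch. Everything in it is hypothetical: the `METRICS` rows stand in for Logilica’s data model, keyword extraction is a simple regex lookup against an assumed `KEYWORD_TO_METRIC` vocabulary, and `summarise` only formats rows where a production system would call an LLM. It shows how the stages hand data to one another, not how our AI Advisor actually implements them.

```python
import re
from dataclasses import dataclass

# Hypothetical metric rows standing in for Logilica's SDLC data model.
METRICS = [
    {"project": "api", "metric": "cycle_time_days", "value": 3.5},
    {"project": "api", "metric": "deploy_frequency", "value": 12},
    {"project": "web", "metric": "cycle_time_days", "value": 5.0},
]

# Hypothetical vocabulary mapping prompt words to metric names.
KEYWORD_TO_METRIC = {
    "cycle": "cycle_time_days",
    "deploy": "deploy_frequency",
    "deployment": "deploy_frequency",
    "deployments": "deploy_frequency",
}

@dataclass
class Query:
    metrics: list[str]
    projects: list[str]

def extract_keywords(prompt: str) -> list[str]:
    """Stage 1: lower-case the prompt and split it into word tokens."""
    return re.findall(r"[a-z_]+", prompt.lower())

def build_query(keywords: list[str]) -> Query:
    """Stage 2: turn recognised keywords into a structured data query."""
    metrics = sorted({KEYWORD_TO_METRIC[k] for k in keywords if k in KEYWORD_TO_METRIC})
    known_projects = {row["project"] for row in METRICS}
    projects = sorted(k for k in set(keywords) if k in known_projects)
    return Query(metrics=metrics, projects=projects)

def fetch_data(query: Query) -> list[dict]:
    """Stage 3: run the query; an empty project list means 'all projects'."""
    return [
        row for row in METRICS
        if row["metric"] in query.metrics
        and (not query.projects or row["project"] in query.projects)
    ]

def summarise(rows: list[dict]) -> str:
    """Stage 4: condense the rows into a short answer (an LLM would do this in practice)."""
    if not rows:
        return "No matching data found."
    return "; ".join(f"{r['project']}: {r['metric']} = {r['value']}" for r in rows)

def answer(prompt: str) -> str:
    return summarise(fetch_data(build_query(extract_keywords(prompt))))

print(answer("What is the cycle time for the api project?"))  # prints "api: cycle_time_days = 3.5"
```

Keeping each stage as a separate function makes it easy to swap the naive keyword lookup or the formatter for model-based components without touching the rest of the pipeline.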

This is possible because our initial database setup involved designing a dedicated schema for Logilica’s data that stores both the associated metadata and its vector embeddings. Once the database has been populated through a data ingestion pipeline, our AI Advisor converts each user query into an embedding and runs a similarity search, comparing it against a vector database of indexed queries and data-related concepts.
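The embed-and-compare step can be sketched as a small in-memory vector store. This is a toy under explicit assumptions: `embed` is a hashed bag-of-words placeholder for a real embedding model, `VectorStore` stands in for an actual vector database, and the indexed phrases and their metadata (`table`, `metric`) are invented for illustration. What carries over is the mechanism: queries and stored concepts live in one vector space, and cosine similarity ranks the stored entries.

```python
import math
import re
import zlib

DIM = 256  # toy size; real embedding models produce far richer vectors

def embed(text: str) -> list[float]:
    """Placeholder embedding: hashed bag-of-words, normalised to unit length."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's built-in hash() for str.
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec

def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; equal to the dot product because inputs are unit vectors."""
    return sum(x * y for x, y in zip(a, b))

class VectorStore:
    """Minimal in-memory index of (text, metadata, embedding) entries."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, dict, list[float]]] = []

    def add(self, text: str, metadata: dict) -> None:
        self.entries.append((text, metadata, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[float, str, dict]]:
        """Return the k entries most similar to the query, best first."""
        q = embed(query)
        scored = [(cosine(q, emb), text, meta) for text, meta, emb in self.entries]
        scored.sort(key=lambda item: item[0], reverse=True)
        return scored[:k]

store = VectorStore()
store.add("average pull request cycle time", {"table": "pull_requests", "metric": "cycle_time"})
store.add("number of deployments per week", {"table": "deployments", "metric": "frequency"})
store.add("open bugs by priority", {"table": "issues", "metric": "open_bugs"})

score, text, meta = store.search("What is our pull request cycle time?", k=1)[0]
print(f"{score:.2f} {text} -> {meta}")
```

The metadata attached to the best match is what lets the next stage know which table and metric to query.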

Logilica’s AI Advisor is designed to handle edge cases such as hallucinations and irrelevant data, incorporating mechanisms that improve its accuracy and reliability. To guard against hallucinations, the system uses a confidence-based approach: it tries to identify when it lacks sufficient information to answer a query accurately. In that case, instead of fabricating a response, it explains its uncertainty to the user.
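A confidence-based gate of this kind might look like the following sketch. It assumes each retrieved item carries a similarity score between 0 and 1 and uses a hypothetical fixed `CONFIDENCE_THRESHOLD` of 0.75; the names `Retrieved`, `Reply` and `respond` are illustrative only, and a real system could derive confidence from other signals as well.

```python
from dataclasses import dataclass

# Hypothetical cut-off; in practice it would be tuned against a set of labelled questions.
CONFIDENCE_THRESHOLD = 0.75

@dataclass
class Retrieved:
    score: float   # similarity between the question and the retrieved item, 0..1
    content: str   # data or context that would be passed to the LLM

@dataclass
class Reply:
    answered: bool
    text: str

def respond(question: str, hits: list[Retrieved],
            threshold: float = CONFIDENCE_THRESHOLD) -> Reply:
    """Answer only from evidence that clears the threshold; otherwise admit uncertainty."""
    confident = [h for h in hits if h.score >= threshold]
    if not confident:
        best = max((h.score for h in hits), default=0.0)
        return Reply(
            answered=False,
            text=(f"I don't have enough information to answer {question!r} reliably "
                  f"(best match {best:.2f}, threshold {threshold:.2f}). "
                  "Could you specify the project or metric you mean?"),
        )
    # A real system would hand this context to the LLM for summarising;
    # joining it keeps the sketch self-contained.
    confident.sort(key=lambda h: h.score, reverse=True)
    return Reply(answered=True, text="Based on: " + " | ".join(h.content for h in confident))

print(respond("What was our cycle time?", [Retrieved(0.42, "deployment counts")]).text)
```

Filtering the evidence before generation, rather than checking the answer afterwards, means the model is never handed weak context it might be tempted to embellish.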

For irrelevant or unanswerable queries, our advisor has built-in safeguards that detect when a question doesn’t align with the available data. For instance, if the model encounters a query like “What is my name?”, it recognises that the information isn’t accessible and prompts the user to provide more context or reformulate the question. This approach ensures that our AI Advisor doesn’t generate speculative or incorrect answers, maintaining the quality and reliability of its responses.
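One simple way to approximate such a safeguard is a scope check that runs before any data query is generated. This sketch swaps in plain keyword overlap against a hypothetical `SUPPORTED_CONCEPTS` set; a production system would more likely rely on embedding similarity or an LLM classifier, and the function names `is_in_scope` and `guard` are illustrative only.

```python
import re
from typing import Optional

# Hypothetical vocabulary of concepts that the SDLC data model can answer questions about.
SUPPORTED_CONCEPTS = frozenset({
    "cycle", "deployment", "deployments", "pull", "request", "requests",
    "commit", "commits", "review", "reviews", "issue", "issues", "bug", "bugs",
})

def is_in_scope(question: str, concepts: frozenset = SUPPORTED_CONCEPTS) -> bool:
    """True if the question mentions at least one concept backed by the data model."""
    tokens = set(re.findall(r"[a-z]+", question.lower()))
    return not tokens.isdisjoint(concepts)

def guard(question: str) -> Optional[str]:
    """Return a clarification prompt for out-of-scope questions, or None to proceed."""
    if is_in_scope(question):
        return None
    return ("I can only answer questions about your SDLC data, such as pull requests, "
            "deployments or issues. Could you rephrase the question or add more context?")

for q in ["What is my name?", "How many pull requests were merged last week?"]:
    print(q, "->", guard(q) or "in scope, continue to query generation")
```

Running the check up front keeps out-of-scope questions from ever reaching query generation, so there is nothing for the model to speculate about.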

Building on the experience described in this article, we are developing a significantly enhanced version 2.0, which is currently available in private beta. We will go into more detail on its architecture and design in the near future, but you can see a sneak peek below or contact us to get access.

Our AI-powered assistant is transforming how we interact with data, making it easier and faster to extract valuable insights. By understanding user queries and generating structured searches, and with continuing improvements in AI technology, we expect its capabilities to keep growing, making it indispensable for businesses and individuals alike. Whether you’re a developer, project manager, or data analyst, an AI Advisor can help you unlock the full potential of the data across your SDLC pipeline, turning overwhelming volumes of data into meaningful insights.